In electrical power system design, transformer rating is often considered the primary criterion for ensuring adequate power supply to connected loads. While transformer capacity defines the maximum load that can be handled without thermal damage, it does not guarantee that the required voltage will be effectively delivered to end-use equipment. Factors such as cable length, conductor size, load characteristics, and power factor significantly influence voltage levels across the distribution network.

A voltage drop study is therefore essential to evaluate the actual voltage available at load terminals under both normal and starting conditions. Without this analysis, systems that appear adequate based on transformer rating alone may suffer poor performance, equipment malfunction, increased losses, and reduced reliability. The study helps ensure compliance with applicable standards, correct equipment operation, and overall system efficiency.

What Is Transformer Rating?

Transformer rating refers to the maximum apparent power a transformer can deliver continuously under specified operating conditions. It is expressed in kVA or MVA and is determined by thermal limits, insulation strength, and cooling capability. Its primary purpose is to ensure that the transformer operates without overheating.

However, transformer rating only addresses the transformer itself. It says nothing about the electrical path between the transformer and the load: cable impedance, distance to load, load distribution across feeders, and motor starting currents are not part of the rating. This leads to a common misconception that increasing kVA capacity will automatically solve voltage-related problems, which is rarely true in real installations.

Why Transformer Rating Alone Is Not Enough

Transformer rating ensures adequate capacity at the transformer terminals, but voltage losses begin as soon as current flows through cables. As power travels through conductors, voltage drops due to resistance and reactance. In systems with long cable runs or high current demand, this voltage drop can be substantial.

Oversizing a transformer does not eliminate voltage drop. A larger unit reduces the drop across its own internal impedance, but the drop along the cables depends on cable impedance and load current, not on transformer size. When this drop is not controlled, motors may draw higher current and run hotter, lighting systems may dim, PLCs and control panels may malfunction, and protective devices may trip unnecessarily. These issues are often mistaken for equipment faults when the real cause is poor voltage distribution. A Voltage Drop Study exposes these hidden problems before they lead to failures.
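To illustrate why a larger transformer does not fix a long feeder, the sketch below compares the two contributions for a hypothetical 415 V installation. The 5 % transformer impedance, the 400 A load at 0.85 power factor, and the 250 m cable with 0.153 Ω/km resistance and 0.08 Ω/km reactance are illustrative assumptions, not data from a real project. The transformer term uses |Z| as a conservative upper bound, and the cable term uses the common approximation ΔV ≈ √3·I·L·(R cos φ + X sin φ).

```python
import math

V_LL = 415.0     # assumed nominal line-to-line voltage (V)
I_LOAD = 400.0   # assumed load current (A)
PF = 0.85        # assumed lagging power factor


def transformer_drop_v(kva: float, z_percent: float, v_ll: float, i_load: float) -> float:
    """Upper-bound drop across the transformer, using |Z| instead of R cos(phi) + X sin(phi)."""
    z_base = v_ll ** 2 / (kva * 1000.0)   # base impedance referred to the LV side (ohm)
    z_ohm = z_percent / 100.0 * z_base    # transformer impedance in ohms
    return math.sqrt(3) * i_load * z_ohm


def cable_drop_v(i_load: float, length_km: float, r_ohm_km: float,
                 x_ohm_km: float, pf: float) -> float:
    """Approximate line-to-line drop along a three-phase cable."""
    sin_phi = math.sqrt(1.0 - pf ** 2)
    return math.sqrt(3) * i_load * length_km * (r_ohm_km * pf + x_ohm_km * sin_phi)


# The cable term does not depend on transformer size at all
cable = cable_drop_v(I_LOAD, 0.25, 0.153, 0.08, PF)
for kva in (500, 1000):
    tx = transformer_drop_v(kva, 5.0, V_LL, I_LOAD)
    print(f"{kva} kVA: transformer {tx:.1f} V, cable {cable:.1f} V")
```

With these assumed numbers the cable contributes roughly 30 V (about 7 % of 415 V) in both cases, while doubling the transformer rating only halves the smaller transformer term.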

What Is Voltage Drop?

Voltage drop is the reduction in voltage that occurs when electrical current flows through the impedance of conductors. In power systems this phenomenon is unavoidable, but it must be controlled to ensure proper equipment operation. The extent of voltage drop depends on cable length, conductor size, load current, and power factor.
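A widely used approximation for the drop along a cable feeding a lagging load is ΔV ≈ k·I·L·(R cos φ + X sin φ), where k = √3 for a three-phase circuit (giving the line-to-line drop), k = 2 for a single-phase circuit (outgoing plus return conductor), R and X are the per-kilometre resistance and reactance, and L is the route length. The sketch below implements that approximation; it neglects the small quadrature component, and the cable values in the example are hypothetical.

```python
import math


def voltage_drop(i_amps: float, length_m: float, r_ohm_km: float,
                 x_ohm_km: float, pf: float, three_phase: bool = True) -> float:
    """Approximate voltage drop (V) along a cable supplying a lagging load."""
    sin_phi = math.sqrt(1.0 - pf ** 2)
    # R*cos(phi) + X*sin(phi) for the whole run, in ohms
    z_eff = (r_ohm_km * pf + x_ohm_km * sin_phi) * length_m / 1000.0
    k = math.sqrt(3) if three_phase else 2.0
    return k * i_amps * z_eff


# Hypothetical example: 100 A at 0.85 pf over 150 m of cable
# with 0.524 ohm/km resistance and 0.09 ohm/km reactance on a 415 V system.
dv = voltage_drop(100.0, 150.0, 0.524, 0.09, 0.85)
print(f"drop = {dv:.1f} V ({100 * dv / 415.0:.2f} % of 415 V)")
```

The formula makes the listed dependencies explicit: the drop scales linearly with length and current, falls as conductor size grows (lower R), and shifts between the resistive and reactive terms as power factor changes.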

Industry standards specify acceptable voltage drop limits to maintain efficiency and reliability. When voltage drop exceeds these limits, equipment may continue operating but under stressed conditions. Over time, this results in higher losses, increased heating, and reduced equipment life. Understanding this behaviour requires proper analysis, which is provided by a Voltage Drop Study.

What Is a Voltage Drop Study?

A Voltage Drop Study is an engineering analysis that evaluates voltage levels throughout an electrical distribution network. It calculates voltage losses from the source to various load points using actual cable parameters and realistic loading conditions.

This study assesses system performance under normal operation as well as peak load scenarios. A properly conducted Voltage Drop Study identifies weak points in cable sizing, highlights areas with excessive losses, and verifies whether equipment receives adequate voltage. It is commonly performed during system design, electrical audits, or when new loads are introduced. In industrial environments, it forms a critical part of broader Electrical Power System Studies.
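The core calculation of such a study can be sketched for a simple radial network: each cable carries its own node's load plus everything downstream, and the drop at a load point is the sum of the drops along every cable back to the source. This is a minimal sketch assuming a radial three-phase network, a fixed voltage at the transformer LV bus, and one common power factor for all loads (so currents can be added arithmetically). The node names, cable data, and the 5 % limit are hypothetical placeholders; the real limit comes from the applicable standard and project specification.

```python
import math
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


@dataclass
class Segment:
    """Cable feeding node `name` from `parent` (None means the transformer LV bus)."""
    name: str
    parent: Optional[str]
    length_m: float
    r_ohm_km: float
    x_ohm_km: float
    load_a: float  # current drawn by loads connected at this node


def voltage_drop_study(segments: List[Segment], v_nominal: float = 415.0,
                       pf: float = 0.85, limit_pct: float = 5.0) -> Dict[str, Tuple[float, bool]]:
    """Return {node: (cumulative drop %, within limit?)} for a radial network."""
    by_name = {s.name: s for s in segments}
    children: Dict[str, List[str]] = {s.name: [] for s in segments}
    for s in segments:
        if s.parent is not None:
            children[s.parent].append(s.name)

    def through_current(name: str) -> float:
        # Current in a cable = its own node load plus all downstream loads
        return by_name[name].load_a + sum(through_current(c) for c in children[name])

    sin_phi = math.sqrt(1.0 - pf ** 2)
    results: Dict[str, Tuple[float, bool]] = {}

    def walk(name: str, upstream_drop: float) -> None:
        s = by_name[name]
        z_eff = (s.r_ohm_km * pf + s.x_ohm_km * sin_phi) * s.length_m / 1000.0
        drop = upstream_drop + math.sqrt(3) * through_current(name) * z_eff
        pct = 100.0 * drop / v_nominal
        results[name] = (round(pct, 2), pct <= limit_pct)
        for child in children[name]:
            walk(child, drop)

    for s in segments:
        if s.parent is None:
            walk(s.name, 0.0)
    return results


# Hypothetical network: a sub-distribution board feeding two motor branches
network = [
    Segment("SDB-1", None, 120.0, 0.153, 0.080, 0.0),
    Segment("Pump-1", "SDB-1", 60.0, 0.524, 0.090, 80.0),
    Segment("Fan-2", "SDB-1", 180.0, 0.524, 0.090, 60.0),
]
for node, (pct, ok) in voltage_drop_study(network).items():
    print(f"{node}: {pct:.2f} %  {'OK' if ok else 'EXCEEDS LIMIT'}")
```

Accumulating the drop from the source outward is what exposes weak points: a branch cable that looks acceptable on its own can still push the load point over the limit once the upstream feeder drop is added.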

Consequences of Ignoring a Voltage Drop Study

Ignoring a voltage drop study during electrical system design can lead to serious operational, safety, and reliability issues, even when the transformer and equipment are adequately rated.

- Poor Equipment Performance

Insufficient voltage at load terminals can cause motors to operate inefficiently, with reduced torque (induction motor torque falls roughly with the square of the applied voltage) and difficulty in starting, and can make sensitive equipment operate unstably.

- Overheating and Reduced Equipment Life

For constant-power loads such as motors, low voltage results in higher current draw to meet the same demand, increasing losses and causing overheating of motors, cables, and transformers, which significantly shortens equipment lifespan.

- Nuisance Tripping of Protection Devices

Excessive current due to voltage drop may cause frequent and unnecessary tripping of circuit breakers and protective relays, affecting system reliability and process continuity.

- Increased Energy Losses

Higher current flow increases I²R losses in conductors, leading to energy wastage, reduced system efficiency, and higher operating costs.

- Failure to Meet Design Standards

Electrical codes and standards (IEC / IEEE) specify or recommend maximum permissible voltage drop limits. Ignoring voltage drop studies can result in non-compliance and rejection during inspections or audits.

- Costly Retrofitting and System Modifications

Undetected voltage drop issues often require expensive corrective measures such as upsizing cables, adding transformers, or installing voltage regulation equipment after commissioning.
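The chain of "lower voltage, higher current, higher losses" described above can be quantified for a load that draws constant power, which is roughly how a loaded induction motor behaves near rated voltage. Line current is I = P / (√3·V·cos φ) and the feeder loss is 3·I²·R. The 200 kW load, 0.85 power factor, and 0.03 Ω per-phase cable resistance are hypothetical values for illustration. Purely resistive loads behave differently: their current falls as voltage falls.

```python
import math


def line_current(p_kw: float, v_ll: float, pf: float) -> float:
    """Line current (A) of a balanced three-phase constant-power load."""
    return p_kw * 1000.0 / (math.sqrt(3) * v_ll * pf)


def cable_loss_kw(i_amps: float, r_ohm_per_phase: float) -> float:
    """I^2 R loss (kW) in the three phase conductors of a feeder."""
    return 3.0 * i_amps ** 2 * r_ohm_per_phase / 1000.0


P_KW, PF, R_CABLE = 200.0, 0.85, 0.03   # hypothetical load and cable values
for v in (415.0, 0.9 * 415.0):          # nominal voltage and a 10 % undervoltage
    i = line_current(P_KW, v, PF)
    print(f"V = {v:.1f} V: I = {i:.0f} A, cable loss = {cable_loss_kw(i, R_CABLE):.2f} kW")
```

At 90 % voltage the current rises by a factor of 1/0.9 (about 11 %) and the I²R losses by 1/0.81 (about 23 %), which is the overheating and energy-waste effect listed above.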

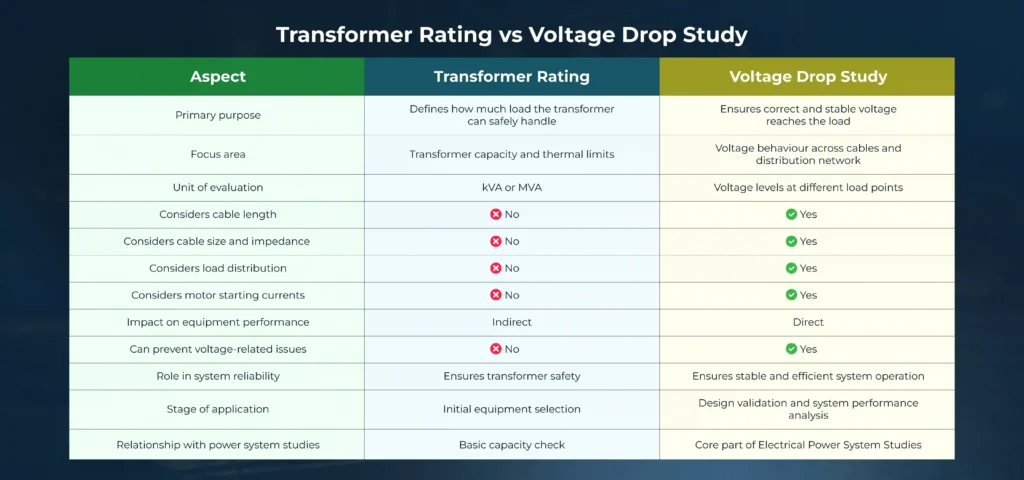

Transformer Rating vs Voltage Drop Study

When a Voltage Drop Study Becomes Critical

The importance of a Voltage Drop Study increases significantly in systems with long-distance power distribution, large industrial plants, data centres, and control rooms. It becomes especially critical for systems with high starting current loads such as motors and compressors. Voltage drop analysis is also essential when existing systems are expanded or modified.

Without reassessing voltage behaviour, system upgrades can introduce new performance issues. A Voltage Drop Study ensures that voltage levels remain within acceptable limits even as load conditions change.
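Motor starting is often the worst case, because a direct-on-line start typically draws several times full-load current at a low power factor. The sketch compares running and starting drop on the same cable. The 150 A full-load current, the 6× starting multiple, the 0.3 starting power factor, and the cable data are assumptions for illustration; a real study should use the manufacturer's locked-rotor data and include the transformer and upstream source, which also contribute during the start.

```python
import math


def drop_pct(i_amps: float, length_m: float, r_ohm_km: float, x_ohm_km: float,
             pf: float, v_ll: float) -> float:
    """Approximate three-phase voltage drop as a percentage of v_ll."""
    sin_phi = math.sqrt(1.0 - pf ** 2)
    dv = math.sqrt(3) * i_amps * (r_ohm_km * pf + x_ohm_km * sin_phi) * length_m / 1000.0
    return 100.0 * dv / v_ll


V_LL = 415.0
FLC = 150.0                     # hypothetical motor full-load current (A)
START_MULTIPLE = 6.0            # assumed DOL starting current as a multiple of FLC
CABLE = (200.0, 0.153, 0.080)   # hypothetical length (m), R and X (ohm/km)

running = drop_pct(FLC, *CABLE, pf=0.85, v_ll=V_LL)
starting = drop_pct(FLC * START_MULTIPLE, *CABLE, pf=0.30, v_ll=V_LL)
print(f"running drop: {running:.2f} %, starting drop: {starting:.2f} %")
```

Because the starting power factor is low, the reactance term X·sin φ dominates during the start, so a cable that is comfortable at full load can produce a drop several times larger when the motor starts.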

How a Voltage Drop Study Improves System Reliability

A well-executed Voltage Drop Study improves system reliability by ensuring correct cable sizing and stable voltage at the load end. Equipment operates closer to its design parameters, efficiency improves, and thermal stress is reduced. Maintenance issues decrease, and future expansion can be planned with greater confidence.
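From the voltage drop perspective, cable sizing can be sketched as choosing the smallest conductor whose drop stays within the limit. The cable table below is hypothetical and must be replaced by manufacturer data. The selection also ignores current-carrying capacity, installation derating, and short-circuit withstand, which a real design must check separately.

```python
import math
from typing import Optional, Tuple

# Hypothetical cable data: size (mm^2) -> (R ohm/km, X ohm/km). Replace with datasheet values.
CABLES = {
    35: (0.668, 0.083),
    50: (0.494, 0.082),
    70: (0.342, 0.080),
    95: (0.247, 0.079),
    120: (0.196, 0.078),
    150: (0.159, 0.078),
    185: (0.128, 0.078),
    240: (0.098, 0.077),
}


def drop_pct(size: int, i_amps: float, length_m: float, pf: float, v_ll: float) -> float:
    """Approximate three-phase drop (% of v_ll) for a given conductor size."""
    r, x = CABLES[size]
    sin_phi = math.sqrt(1.0 - pf ** 2)
    dv = math.sqrt(3) * i_amps * (r * pf + x * sin_phi) * length_m / 1000.0
    return 100.0 * dv / v_ll


def smallest_size(i_amps: float, length_m: float, pf: float, v_ll: float,
                  limit_pct: float) -> Optional[Tuple[int, float]]:
    """Smallest listed size meeting the drop limit, or None if none does."""
    for size in sorted(CABLES):
        pct = drop_pct(size, i_amps, length_m, pf, v_ll)
        if pct <= limit_pct:
            return size, round(pct, 2)
    return None


print(smallest_size(150.0, 200.0, 0.85, 415.0, limit_pct=5.0))
```

Returning None rather than the largest size makes it explicit when no single cable can meet the limit, which is the point where parallel cables, a closer supply point, or a different voltage level should be considered.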

In comprehensive Electrical Power System Studies, voltage drop analysis plays a key role in preventing hidden performance problems. This proactive approach shifts electrical management from reactive troubleshooting to predictive engineering.

Role of Power System Studies in Electrical Design

Voltage drop analysis delivers the most value when combined with other evaluations such as load flow analysis, short circuit studies, protection coordination, and earthing and EMI assessments. Together, these studies provide a complete understanding of system behaviour under real operating conditions.

Manav focuses on delivering integrated Electrical Power System Studies that address not only capacity but also voltage quality, safety, and long-term reliability. This ensures that electrical systems perform consistently throughout their operational life.

Conclusion

Transformer rating alone is insufficient to ensure reliable and efficient power delivery to electrical loads. While it confirms the system’s thermal capacity, it does not account for voltage variations caused by conductor length, sizing, load characteristics, and operating conditions. A voltage drop study is therefore a critical component of electrical system design, as it verifies that acceptable voltage levels are maintained at all load points in accordance with applicable standards.

By incorporating voltage drop analysis at the design stage, potential operational issues such as equipment malfunction, excessive losses, and premature failure can be effectively avoided. This approach ensures compliance, enhances system reliability, and minimizes future corrective costs, resulting in a robust and efficient electrical distribution system.

– Author: Vigneshwaran S